Get Rich or Die Trying: Betting on the Future

Fig. 1: Decius Mus consulting the Haruspices (1617) by Peter Paul Rubens

Ways of Knowing the Future

On the eve of battle against the army of an Italic tribe, somewhere in an open field near the city of Capua in the year 340 BCE, the two consuls leading a Roman army, Publius Decius Mus and Titus Manlius Torquatus, interrogated the will of the gods. Seeking guidance, they consulted the haruspices, the seers of ancient Rome, whose practice of divination, the sacral “hepatoscopy,” was grounded in the examination of divine signs on animal livers. To the generals’ misfortune, the features of the liver revealed that this time victory in the coming battle would require a greater sacrifice. According to the haruspices, the situation appeared so grim that a mere animal sacrifice would not suffice to please the gods. Victory would demand the ultimate sacrifice, devotio, from the general whose flank collapsed first. “Devotio,” from which our word “devotion” stems, was the pious self-sacrifice common to the spiritual order of Roman culture, the ultimate heroic act to ensure the realization of a sublime end. And when, during the battle, Consul Decius Mus saw the morale of the troops under his command begin to dwindle, he mounted his horse, plunged into the enemy’s ranks, and sacrificed his life for the glory of the Republic, as the haruspices had foretold. Stirred by this heroic performance, his soldiers marched forward, vanquishing the opposing army and thereby achieving another victory in the rise of Rome. But was the prediction of the haruspices accurate, or the sacrifice truly necessary?

The skeptic Cicero already derided such divining practices in his dialogue De divinatione, where he recounts the well-known bemusement of Cato the Elder, who wondered how one haruspex could pass another without bursting out laughing, and pursues his inquiry with the critical question: “For how many things predicted by them really come true? If any do come true, then what reason can be advanced why the agreement of the event with the prophecy was not due to chance?”1 Such critical queries concerning the prophetic craft were doubtless as common then as they are today, but it was not until the scientific revolution and the rise of empiricism that the art of prediction was subjected to more systematic scrutiny. At least in theory.

Marching in step with the rise of science, our understanding of experience also underwent a lasting transformation, as “the scholastic notion of ‘experience’ was gradually modified in the course of the seventeenth century from ‘generalized statements about how things usually occur’ to ‘statements describing specific events,’ particularly experiments.” This shift of focus to particulars led to the conceptualization of a “datum of experience, as distinguished from the conclusions that may be based on it,” promising nothing less than “freedom from theoretical bias.”2 Thereafter, measurements, those quantitative “nuggets of experience,” established themselves as a seemingly objective form of representation, supposedly detached from theory, and became the empirical foundation of modern knowledge production, not only in the natural sciences but increasingly also in the prediction of our social world, especially its financial aspects.

In exchanging the interpretation of livers for tables, one of the first uses of statistical data for forecasting in social settings may have been the mortality estimates of John Graunt, a London haberdasher who, according to the historical record, became the first demographer and epidemiologist. Through his meticulous analysis of the London bills of mortality, he laid the basis for probabilistic actuarial life tables.3 To obtain the values indicating the probability of a person dying before their next birthday, Graunt used nothing more than the simplest multiplication techniques. Later, these patchy life tables would form the basis for calculating the premiums of the first life insurance policies, mostly to the disadvantage of those they insured.4
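To make this kind of arithmetic concrete, here is a minimal Python sketch of how a probability of death can be read off a survivorship table. It assumes a hypothetical table of survivors out of 1,000 births at consecutive ages (the numbers are invented for illustration and are not Graunt's figures) and computes, for each age, the share of those still alive who die before reaching the next tabulated age.

```python
# Hypothetical survivors l_x out of 1,000 births at consecutive ages x.
# These counts are invented for illustration; they are not Graunt's figures.
survivors = {0: 1000, 1: 850, 2: 800, 3: 780}


def death_probabilities(table: dict[int, int]) -> dict[int, float]:
    """Return q_x = (l_x - l_next) / l_x for every age that has a successor in the table."""
    ages = sorted(table)
    return {
        age: (table[age] - table[nxt]) / table[age]
        for age, nxt in zip(ages, ages[1:])
    }


if __name__ == "__main__":
    for age, q in death_probabilities(survivors).items():
        print(f"age {age}: probability of dying before the next tabulated age = {q:.3f}")
```

The calculation needs nothing beyond subtraction and division, which is why such tables could be compiled entirely by hand.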

Fictitious Capital: A Collective Gambling Disorder?

With the invention of the stock market and the rise of modern banking, bets on the future expanded drastically within the capitalist system and became the de facto modus operandi of capital accumulation, no longer requiring investors to take part directly in the operation of enterprises and allowing capital holders to profit from commercial prognosis like never before. Stock investment soon became a mass phenomenon; even Karl Marx traded passionately on the London Stock Exchange, bragging to his uncle Lion Philips in a letter from June 1864:

I have, you will be a little surprised, speculated, partly in American funds but especially in English stocks, which are springing up like mushrooms here this year (for all possible and impossible stock ventures) and are driven to a quite unreasonable height and then usually burst. In this way, I have gained over £400, and will now, once the entanglement of political conditions affords greater scope, start anew. This kind of operation makes small demands on one’s time, and it’s worth taking some risk in order to relieve one’s enemies of their money.5

Nearly two decades later, in 1882, a year before his death, Marx ridiculed professional gamblers in a letter to his daughter Eleanor as “a bunch of lunatics” for believing they could outwit a pure game of chance through their “system.” Using domain-specific knowledge to relieve other speculators of their money on the stock market, however, seemed to be another matter for him. That said, his hedonistic traits and chronic dependence on the financial support of his family and friends suggest that he may not have had what it takes to be a professional trader. His own speculative “system” must have failed him regularly, and the letter quoted above was surely written in one of those rare moments when the dopamine rush of financial gains lifted the gambling Marx to delusional heights.

Fig. 2: The Stock Exchange (1810), from Rudolph Ackermann, The Microcosm of London, or London in Miniature, vol. 3, London, 1810. Plate 7, illustration by Augustus Charles Pugin, graphite on ivory wove paper.

Marx was likely unfamiliar with the first derivatives of his era, but he would certainly have been a connoisseur of options allowing him to short capitalism. Today he would no doubt be amused by crazes such as the meme-stock frenzy surrounding the GameStop short squeeze, which drove several hedge funds to the brink of bankruptcy, since he saw crisis as a necessary, although not sufficient, condition for revolution.6 Why not let the movement profit on the way down? Whether he would have succeeded with such strategies is doubtful, however, because his theory of crisis did not offer any concrete time frame. According to the historian Annette Vogt, the chemist Carl Schorlemmer suggested to Marx that economic crises might be predictable with the help of differential calculus, which motivated Marx’s dilettante exploration of mathematics as recorded in his Mathematical Manuscripts.7 In a letter to Engels from 1873, Marx wrote: “I have repeatedly attempted, for the analysis of crises, to compute these ‘ups and downs’ as fictional curves, and I thought (and even now I still think this possible with sufficient empirical material) to infer mathematically from this an important law of crises.” But with his limited mathematical understanding and lack of economic data, Marx failed to find any proof for this hypothesis and “decided for the time being to give it up.” The idea that history could be modelled in the same way as physics was then left to be taken up by his less judicious contemporaries.

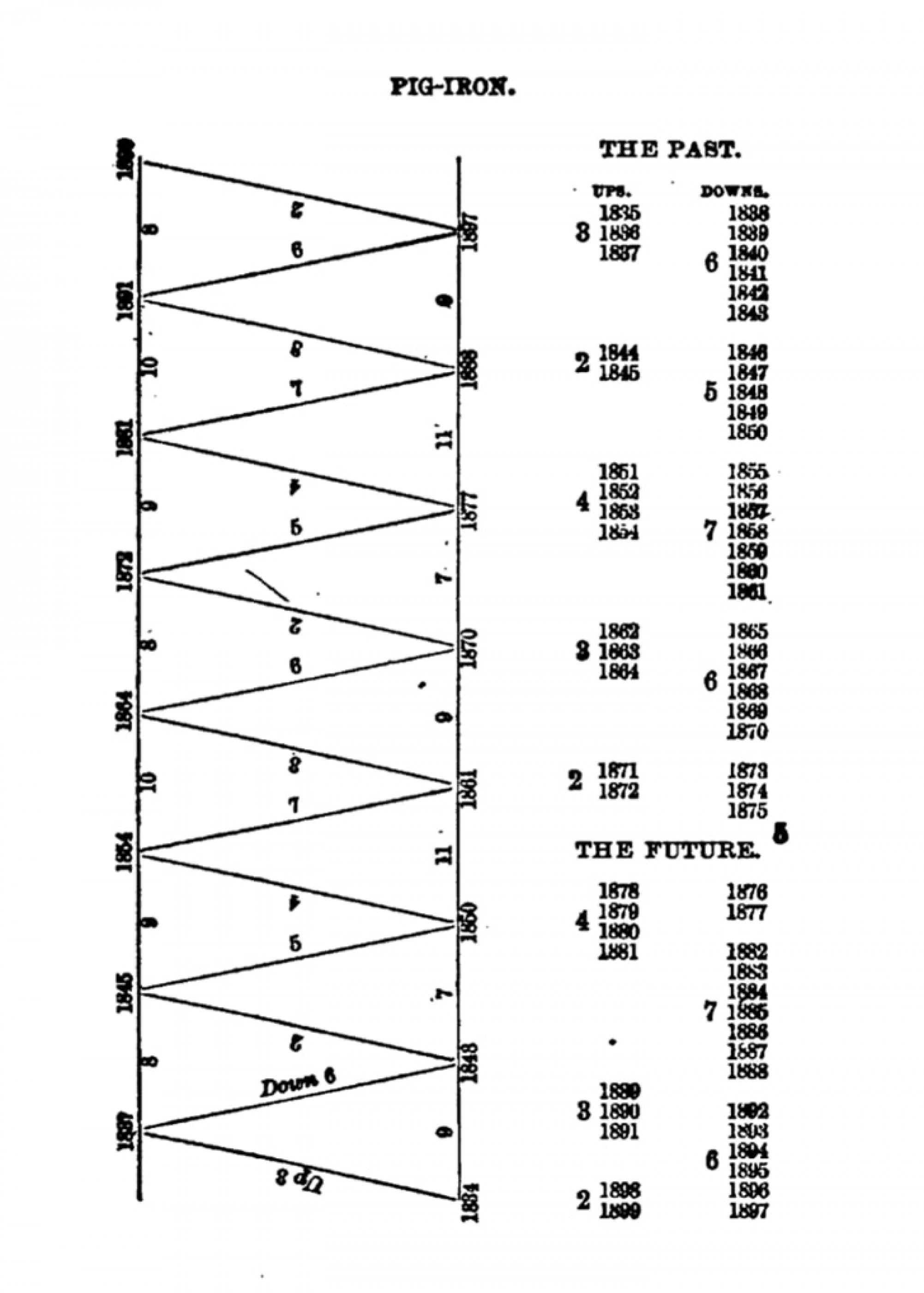

When the “avalanche of printed numbers” rolled over the nineteenth century,8 forecasts based on statistical data steadily gained importance as a means of addressing the periodic recurrence of capitalist crises. Although these techniques had been transposed from the natural sciences, in particular meteorology, it is no exaggeration to say that the fields of financial and economic forecasting were also born from the ashes of capitalist crashes. In the late nineteenth century, figures like Samuel Benner, a farmer who had lost his fortune in the panic of 1873, pioneered the field with dubious speculations about the rule-like periodicity of financial panics. Inspired by John Tice’s Elements of Meteorology,9 Benner applied the idea of “cyclicity” developed in meteorology to the prediction of commodity prices. On the basis of the empirical price data he encountered, Benner arrived at the naïve conclusion that the patterns he discovered in the datasets “repeat themselves in definite length” into the future, and he was convinced that this “law of repetition” holds regardless of contingencies such as wars or technological innovation.10

Benner did not offer a causal explanation for his patterns, but simply proclaimed that these cycles were driven by an empirical law he named the “Cast Iron Rule,” stating that:

without determining a fixed and exciting cause for their existence, or attempting to verify theories of which we are distrustful, we will risk our reputation as a prophet, and our chances for success in business upon our 11 year cycle in corn and hogs; in our 27 year cycle in pig-iron, and in our 54 year cycle in general trade, upon which we have operated with success in the past.11
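Benner’s “law of repetition” amounts to a purely mechanical extrapolation. The Python sketch below illustrates that logic; it is not a reconstruction of Benner’s actual procedure, which is not spelled out here. Given a hypothetical last turning point and one of his fixed cycle lengths, it projects future turning points by adding the cycle length again and again, with no probabilities and no room for contingencies. The function name and the 1870 starting year are assumptions chosen for illustration.

```python
def project_turning_points(last_turning_point: int, cycle_length: int, horizon: int) -> list[int]:
    """Project future turning-point years by assuming a rigid, fixed-length cycle.

    Nothing in the projection depends on anything except the last turning point
    and the cycle length: wars, innovations, or new data cannot change the result.
    """
    if cycle_length <= 0 or horizon < 0:
        raise ValueError("cycle_length must be positive and horizon non-negative")
    start = last_turning_point + cycle_length
    stop = last_turning_point + horizon + 1  # include the final year of the horizon
    return list(range(start, stop, cycle_length))


if __name__ == "__main__":
    # Hypothetical: a price peak in 1870 combined with the 11-year cycle in corn and hogs.
    print(project_turning_points(1870, 11, 40))
```

Whatever happens in the world, the output stays the same, which is precisely the deterministic blind spot at issue.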

Similar fallacies, which failed to grasp the nature of probability and rested on a belief in rigid statistical laws and deterministic cyclicity, were still widespread in the early theories of business cycles. They can be found, for instance, in the “sunspot theory” of the economist William Stanley Jevons, who believed that commercial crises were caused by periodic changes in solar influences determining weather conditions, and in the works of the statistician Clément Juglar, who extracted a six-year business cycle from statistical data but was more modest in his conclusions, treating it as a mere indicator of a possibly looming crisis rather than as the deterministic expression of a predictable periodicity. In the last edition of his Prophecies, when Benner felt death closing in, he remarked that “the spirit of prophecy has nearly departed,” and hoped that “some enthusiastic and ambitious person” would take up his work.12

Fig. 3: The Pig Iron cycle, from Samuel Benner, Benner’s Prophecies of Future Ups and Downs in Prices, Cincinnati, 1876

Benner’s plea was answered after the stock market panic of 1907, when a new breed of forecasting entrepreneurs took up his trade, selling investors the foresight to navigate the shallow waters of the stock market. The most prominent among them, Roger Babson, offered financial reports that included what he called a “business barometer,” which again drew heavily, at least metaphorically, on meteorological forecasting. Although, after Babson, “the forecaster was an instantly recognizable figure in American business,” his forecasting method was in truth nothing but a wild “combination of science and scientism.”13

By the early twentieth century, predictions drew increasingly on quantitative data. However, the model generating forecasts during this time remained, for the most part, the human brain. Although Jevons and Juglar, and later Irving Fisher, all introduced high-level mathematics to the field (Fisher through the seminal work on business cycles he developed in The Purchasing Power of Money14), and although rudimentary statistical methods were already being tested, it was not until the 1930s that the models of modern statistics slowly began to claim interpretive sovereignty over the future.15 Yet after the stock market crash of 1929, which, it deserves emphasising, hardly any forecaster saw coming, and after countless failed forecasts of an early recovery from what would become the Great Depression, the discipline’s reputation was ruined. It would take another crisis, this time a global war, to bring about the leap forward in information technologies and management science that could revive the field and put its methodological developments into practice.16

War, the Mother of Invention?

Especially in the United States, the Second World War gave rise to a military-industrial-academic complex that turned out to be a crucial driver of innovation, birthing several important research institutions. Within this assemblage, one that extended far into the Cold War, the first digital computers were variously deployed for cryptographic codebreaking, the simulation of the blast radii of the first atomic bomb, and the calculation of artillery firing tables. In the following decades, these calculative instruments slowly trickled down to the private sector, where they later supplied the computing power to run statistical forecasting models. Before the dawn of digital computers, these tedious calculations had to be performed with pen and paper by arithmetically skilled individuals, called “computers.”17 Not only were their calculations error-prone and slow, but their wages were also a considerable cost factor, which made statistical forecasting economically infeasible for all but large state-run statistics bureaus.

Like the computer, the methodological toolset of contemporary forecasting also has many of its roots in the operations research of the WW2 military apparatus. During the war, the father of cybernetics, Norbert Wiener, developed a filter method for his infamous anti-aircraft predictor to determine the flight path of evasive enemy aircraft.18 The fundamental method of exponential smoothing is likewise rooted in the logistics of war, having first been formalized by the statistician Robert Goodell Brown while serving in the navy.19 In 1944, he applied exponential smoothing “in the analysis of a ball-disc integrator used in a naval fire control device” for anti-submarine warfare.20 After leaving the navy’s Operations Evaluation Group, Brown joined the consulting firm Arthur D. Little, where he introduced his forecasting method for inventory control.21 Brown’s biography exemplifies the widespread post-war transfer of knowledge from military research to commercial use in industrial, governmental, and financial contexts.
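For readers unfamiliar with the technique, a minimal Python sketch of simple exponential smoothing follows. It assumes the textbook recursive form s_t = alpha * x_t + (1 - alpha) * s_(t-1), initialized with the first observation (one of several common conventions), and it is not a reconstruction of Brown’s own naval or inventory-control procedures. The helper `implied_weights`, a name chosen here for illustration, shows why the method is called exponential: the weight on an observation k steps in the past is alpha * (1 - alpha)^k, whereas a simple moving average weights every observation in its window equally.

```python
def exponential_smoothing(series: list[float], alpha: float) -> list[float]:
    """Simple exponential smoothing: s_t = alpha * x_t + (1 - alpha) * s_(t-1).

    The first smoothed value is set to the first observation (a common convention).
    The last element of the result serves as the forecast for the next period.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must lie in (0, 1]")
    if not series:
        return []
    smoothed = [series[0]]
    for x in series[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


def implied_weights(alpha: float, n: int) -> list[float]:
    """Weight given to the observation k steps back, for k = 0..n-1 (ignoring the initial value)."""
    return [alpha * (1 - alpha) ** k for k in range(n)]


if __name__ == "__main__":
    demand = [10, 12, 11, 15]  # hypothetical demand figures
    print(exponential_smoothing(demand, alpha=0.5))
    print(implied_weights(0.5, 4))
```

A larger alpha makes the forecast react faster to recent changes, while a smaller alpha smooths more heavily; the whole method needs only one stored value per series, which made it attractive for inventory control.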

Over the following decades, these techniques and an ever-growing number of statistical models established themselves as essential parts of virtually every business management paradigm. The same happened outside the enterprise, where statistical models were increasingly deployed to predict economic growth, stock prices, or the course of global pandemics. Yet until very recently, highly sophisticated statistical models did not deliver more accurate predictions in social settings than the simplest ones, such as exponential smoothing. This curious fact was first observed in early accuracy studies and later confirmed by scientific forecasting contests such as the prestigious Makridakis Competitions, named after the statistician Spyros Makridakis, who first made the discovery. One could go as far as to say that most advances in forecasting over the last decades were simply data-driven rather than grounded in superior pattern recognition.
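Forecasting competitions rank methods by their errors on data withheld from the forecaster. The Python sketch below assumes the symmetric mean absolute percentage error (sMAPE), in one common variant, as used among the accuracy measures of the later Makridakis competitions, and scores the simplest benchmark of all, the naive “no-change” forecast, on a hypothetical series. Any more sophisticated model would be scored on exactly the same held-out points, which is how comparisons of this kind are made. The function names and data are assumptions for illustration.

```python
def smape(actual: list[float], forecast: list[float]) -> float:
    """Symmetric mean absolute percentage error, in percent.

    Each term is 2|F - A| / (|A| + |F|); a term is undefined when both values are 0.
    """
    if len(actual) != len(forecast) or not actual:
        raise ValueError("actual and forecast must be non-empty and of equal length")
    terms = [2 * abs(f - a) / (abs(a) + abs(f)) for a, f in zip(actual, forecast)]
    return 100 * sum(terms) / len(terms)


def naive_forecasts(history: list[float], holdout: list[float]) -> list[float]:
    """One-step-ahead 'no-change' forecasts: each period is predicted by the previous observation."""
    if not history:
        raise ValueError("history must contain at least one observation")
    if not holdout:
        return []
    return [history[-1]] + holdout[:-1]


if __name__ == "__main__":
    # Hypothetical data: two observations for fitting, two held out for scoring.
    history = [100.0, 105.0]
    holdout = [110.0, 99.0]
    naive = naive_forecasts(history, holdout)
    print(naive, round(smape(holdout, naive), 2))
```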

After several decades in which statistical forecasting failed to move substantially beyond the methods of the post-war era, things changed when deep neural networks introduced a new interpretative logic, promising to make sense of the future through a seemingly enhanced capacity for pattern extraction. Indeed, these predictive techniques can process vast numbers of data points and integrate multidimensional data sources into the forecasting model, such as relational data and metadata (data about data, such as the time at which and the location from which a given message was sent), which had previously always remained external to statistical models. This gives such algorithms a decisive advantage that can drastically increase forecasting accuracy. Yet despite such advances, subjective overrides based on external domain knowledge, intuitive thinking, and extensive experience remain the norm when forecasting in social settings. As the statistician Gwilym Jenkins remarked some time ago, “the fact remains that model building is best done by the human brain and is inevitably an iterative process.”22 With the rise of machine learning, however, we have entered a stage in human history in which artificial intelligences might soon create better models than humans.

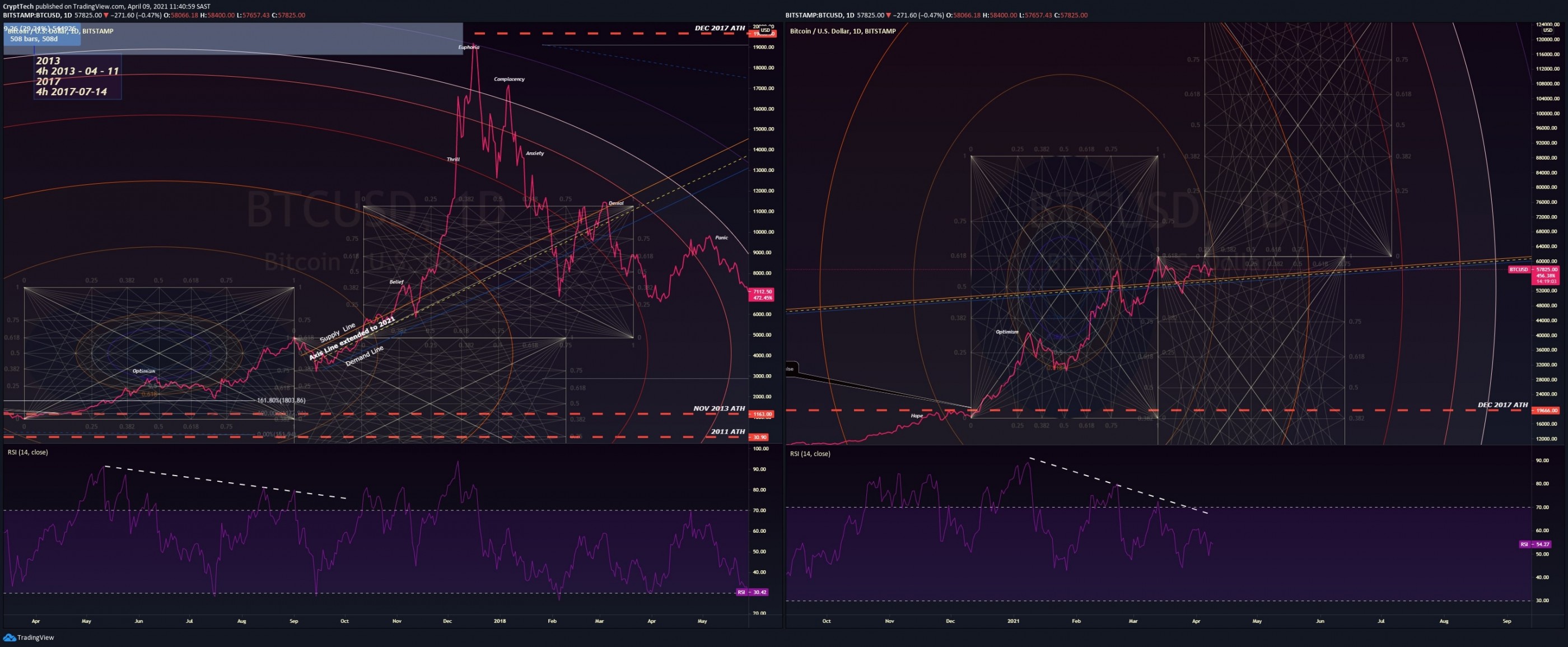

Fig. 4: Bitcoin TradingView Screenshot

Mythos and Logos

Far from being flawless crystal balls, such predictive models have their imperfections; in many cases, however, they seem to outperform even the best human experts. Yet even if these algorithms predict better than humans do, relying on them still demands a sacrifice, a modern-day devotio, one could say. Not only do such algorithms have an excessive hunger for computational power, thereby consuming vast amounts of material resources and electricity; they also constitute a modern form of epistemic alchemy, as critical voices in the industry have emphasized.23 We cannot comprehend on what basis their outputs are generated within the cavernous depths of their neural pathways, and we thus run the risk of reproducing existing biases. It is questionable whether the opaque nature of these architectures will ever be transformed into a transparent glass-box model that is truly comprehensible to the user.

Despite all the potential gains in forecasting accuracy, we have to remind ourselves that these systems will never produce true certainty about the future: Laplace’s Demon will forever remain the pipe dream of the Promethean spirit. The statistician George Box memorably expressed this epistemic modesty when he affirmed that “all models are wrong, but some are useful.” Consider Aladdin, an acronym for “asset, liability, debt, and derivative investment network”: the risk management system at the heart of BlackRock, the world’s largest asset manager, it informs decision makers in control of more than $21.6tn who carry out over 250,000 trades a day through the platform. Yet neither Aladdin nor any other algorithm can accurately predict tomorrow’s oil price. This is partly because, unlike the projected trajectory of an asteroid, actions based on such a prediction alter the very future being predicted. An algorithm might get lucky once, and may even outperform the average market participant, but who would really be willing to entrust their hard-earned money to a trading bot that might lose everything on a single bad trade without being able to articulate the rationale behind its decision?

There is a widely documented socio-psychological phenomenon called “algorithm aversion,”24 which describes how we tend to forgive the mistakes of our own kind more easily than those of machines, from which we demand total mastery. So even though these algorithms might on average do the job better than most humans, there is something uncanny about them that erodes our trust. Some might say for the better. On the other hand, one might ask how this differs from the deficiencies of investment bankers, who also risk losing their customers’ funds but can at least blame it on their collective gambling addiction. The recent implosion of the FTX crypto exchange, caused by high leverage and the mismanagement of the funds entrusted to it, is just another case in this ongoing history of human greed, in which Sam Bankman-Fried, the effective altruist and kingpin behind the trading platform, better known as “SBF,” proudly carries the torch passed on to him by the likes of Bernie Madoff and Charles Ponzi.25

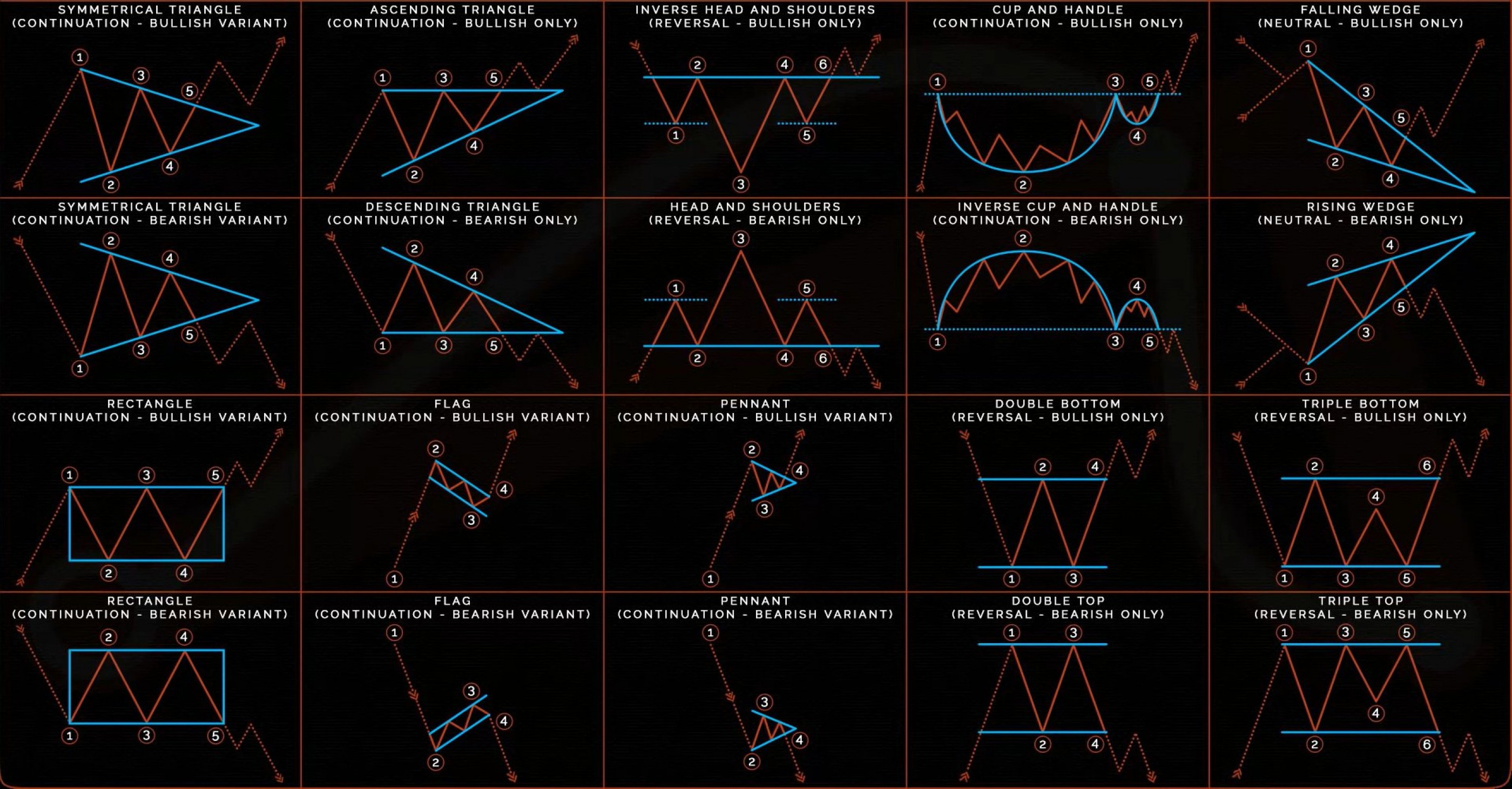

Fig. 5: Common Trading Patterns

On the brink of capitalist collapse, a growing number of desperate souls who seek to elevate their precarious social position through high-risk investments in volatile assets such as cryptocurrencies are drawn back into the arms of dubious financial advisors, who today offer their services on social media platforms. Posing as FIRE (Financial Independence, Retire Early) mentors to greedy retail investors, selling coaching material and profiting off affiliate commissions, these self-proclaimed experts rely for the most part on two basic strategies: fundamental analysis, a more qualitative method of determining an asset’s real or fair value, and, increasingly, the archaic art of pattern trading, also known as technical analysis. The chartists who practice the latter craft, the financial haruspices of our times, discover in the ups and downs of price movements geometric forms such as rising wedges, inverse heads and shoulders, or even cups with handles. Each of these patterns is endowed with its own predictive meaning, and together they constitute the rationale for the financial decisions of millions of investors today.

In ancient Mesopotamia, one of the cradles of our civilization, astrology emerged out of the very same overwhelming experience of complexity and the same hardwired human desire for pattern recognition, when people looking at the seemingly random arrangement of celestial bodies in the night sky discovered certain constellations we now know as the signs of the zodiac. This lust for patterns lives on today in the technical analysis of these financial high priests, and with the smell of incense in the air, it seems the myth continues to flourish even in our enlightened world. But will it help me get rich?

Footnotes

Cicero, De Divinatione, translated by William Armistead Falconer, Loeb Classical Library edition: https://penelope.uchicago.edu/Thayer/e/roman/texts/cicero/de_divinatione/2.html

Lorraine Daston, “Baconian Facts, Academic Civility, and the Prehistory of Objectivity,” in Rethinking Objectivity, ed. Allan Megill, Post-contemporary interventions, Durham: Duke University Press, 1994: pp. 37–64.

John Graunt, Natural and Political Observations Mentioned in a Following Index, and Made upon the Bills of Mortality, London: John Martyn, 1667.

In what might have been the first demands for open data, fierce critics of the insurance industry such as Charles Babbage and Thomas Rowe Edmonds argued in the early nineteenth century for public access to insurance datasets, which ultimately led to the reform of the industry. Charles Babbage, A Comparative View of the Various Institutions for the Assurance of Lives, London: Mawman, 1826. See also Martin Campbell-Kelly, “Charles Babbage and the Assurance of Lives,” IEEE Annals of the History of Computing, vol. 16, no. 3 (1994): p. 5l; Daniel C. S. Wilson, “Babbage Among the Insurers: Big 19th-century Data and the Public Interest,” History of the Human Sciences, vol. 31, no. 5 (2018): pp. 129–53.

Karl Marx, “Letter to Lion Philips, 25 June 1864,” in Marx Engels Werke, vol. 30, Berlin: Dietz Verlag, 1964.

See Michael Hiltzik, “Explaining the Reddit-fueled Run on Gamestop,” Los Angeles Times, January 26, 2021, https://www.latimes.com/business/story/2021-01-26/gamestop-short-squeeze-stupid

Nelli Tügel, “‘Engels wusste gar nichts, Marx immerhin ein bisschen’: Interview mit Annette Vogt,” Analyse und Kritik, December 13, 2022, https://www.akweb.de/ausgaben/688/engels-wusste-gar-nichts-marx-immerhin-ein-bisschen-die-mathematischen-manuskripte/.

Ian Hacking, “Biopower and the Avalanche of Printed Numbers,” Humanities in Society vol. 5, nos. 3 & 4 (1982): pp. 279–95.

John Tice, Elements of Meteorology, Saint Louis: Meteorological Research and Publication Company, 1875.

Samuel Benner, Benner’s Prophecies of Future Ups and Downs in Prices. What Years to Make Money on Pig-Iron, Hogs, Corn and Provisions, Cincinnati: Robert Clark & Co., 1887: pp. 122–3, p. 133.

Ibid., p. 123.

James H. Brookmire, “Methods of Business Forecasting based on Fundamental Statistics,” The American Economic Review, vol. 3, no. 1 (1913): pp. 43–58, here p. 49.

Walter A. Friedman, Fortune Tellers: The Story of America’s First Economic Forecasters, Princeton: Princeton University Press, 2014: pp. 14, 30.

Irving Fisher, The Purchasing Power of Money. Its Determination and Relation to Credit, Interest, and Crises, New York: Macmillan Co., 1911.

Spyros Makridakis, “A Survey of Time Series,” International Statistical Review / Revue Internationale de Statistique, vol. 44, no. 1 (1976): pp. 29–70, here p. 33.

With this military a priori, statistical forecasting follows in the footsteps of logistics. Some two centuries ago, the term logistique was coined by Antoine-Henri Jomini, a general who served under both Napoleon and the Russian tsar. Nothing seems to demand accuracy, optimization, and efficiency more than the necessities of warfare.

Alan Turing described their rigid work routine in the following terms: “The human computer is supposed to be following fixed rules; he has no authority to deviate from them in any detail.” Alan M. Turing, “I.—Computing Machinery and Intelligence,” Mind, vol. 59, no. 236 (1950): pp. 433–60, here p. 436.

See: Peter Galison, “The Ontology of the Enemy. Norbert Wiener and the Cybernetic Vision,” Critical Inquiry, vol. 21, no. 1 (1994): pp. 228–66.

In comparison to simple moving averages, where past observations are weighted equally, exponential smoothing is a robust and easy-to-learn forecasting technique that assigns exponentially decreasing weights to past data points, so that recent observations count most; extended variants such as the Holt-Winters method can also address trends and seasonality in a data set.

Galison, “The Ontology of the Enemy.”

Saul I. Gass and Michael C. Fu (eds.), Encyclopedia of Operations Research and Management Science, Boston, MA: Springer US, 2013.

Quoted in Robert Goodell Brown, Statistical Forecasting for Inventory Control, New York: McGraw-Hill, 1959.

P. Newbold and C. W. J. Granger, “Experience with Forecasting Univariate Time Series and the Combination of Forecasts,” Journal of the Royal Statistical Society. Series A (General), vol. 137, no. 2 (1974): p. 131.

Ali Rahimi and Ben Recht, “Reflections on Random Kitchen Sinks,” argmin blog, December 5, 2017, http://www.argmin.net/2017/12/05/kitchen-sinks/.

Speaking of addictions: SBF, jacked up on nootropics, was infamous for playing the video game League of Legends during important meetings. During his last interview on Twitter Spaces before his arrest, constant clicking noises could be heard in the background. When asked whether he was gaming again, he cheekily admitted his guilt.


Published on 2023-01-18 20:00