Don’t Believe the (Criti)-hype

Fig. 1: Jean Marc Cote (if 1901) or Villemard (if 1910), “France in 2000 year (XXI century). Future school.” France, paper card.

I’m sorry to say it’s that time again: the next attack, the next takeover of the world by AI is apparently imminent. As if there weren’t other planetary-scale crises to attend to in these times, the call for a moratorium on AI labs to pause the training of AI systems more powerful than the GPT-4 language model is spreading a drastic doomsday mood. The Future of Life Institute, a transhumanist and longtermist organization that initiated the circulation of this open letter, solemnly proclaims that:

Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization?[1]

The dystopian tone surrounding this alleged AI apocalypse is not only alarmist, but also rather irritating at first glance. Hype again, you might think, and stop reading, sighing and rolling your eyes. However, there is a bundle of other problems associated with this letter to which I would like to draw your attention.

The letter is gaining momentum. It is not the brainchild of a few, but has been signed by many influential researchers in the field of AI, not only from industry but also from elite universities such as Cambridge and Harvard.

I think it should be evident enough that the letter’s central claim is nonsense. Many respected AI researchers argue that technology companies are using “AI” as a marketing term to hype software that cannot do what it claims and that is, in many cases, dangerous when used in mainstream products before it’s ready. Nevertheless, organizations like the Future of Life Institute are trying to convince people that software like ChatGPT is a step on the road to artificial general intelligence, when in fact it’s a text generator, a technically sophisticated chatbot that has no consciousness and no idea what it’s actually doing. Yes, AI is dangerous, but (apart from the question of disinformation) for different reasons than the letter suggests: reasons that should rather be analyzed in the context of surveillance, data theft, and extractivist regimes.

The letter and its repercussions are symptomatic of contemporary times in that they exemplify “classic” power and economic struggles over agenda-setting, claims-making, discourse-framing, and ultimately AI governance, struggles that now unfold at extremely high speed in the age of ubiquitous (social) media.

As a result, this letter has spread throughout the Western mainstream media, which all too often uncritically reproduce its dramatic claims, further inflaming them. Lee Vinsel has used the term “criti-hype” for this process, a form of critical writing that parasitically seizes on and even inflates the hype and in this way “feeds and nourishes on the hype as criti-hype.”[2] This, of course, captures the attention of the viewer. Isn’t an impending takeover by a man-made intelligence much more exciting than arduous social struggles for reproductive rights, housing, or a living wage?

It is not surprising, then, that the term “AI race” is used in a variety of struggles for dominance. It suffices here to mention only a few of these: the race to implement AI for automated weapons systems; the race to dominate markets, in which Microsoft and Google in particular have been competing fiercely since the release of ChatGPT last November; and finally, the race to secure funding for independent academic research. “Critical and independent AI” research in particular is often sponsored by tech companies themselves, as in the case of the AI Now Institute, which is funded by Microsoft. The open letter has now been signed by more than 27,000 people, including prominent tech bigwigs like Elon Musk and Steve Wozniak, all of whom seem concerned that an AI superintelligence could emerge. So far, so familiar from the cycle of AI hype sparked by the self-driving car debate a few years ago: a discussion on the promises and limits of autonomous cars that invoked a similarly alarmist and drastic language of a looming takeover by intelligent robots.

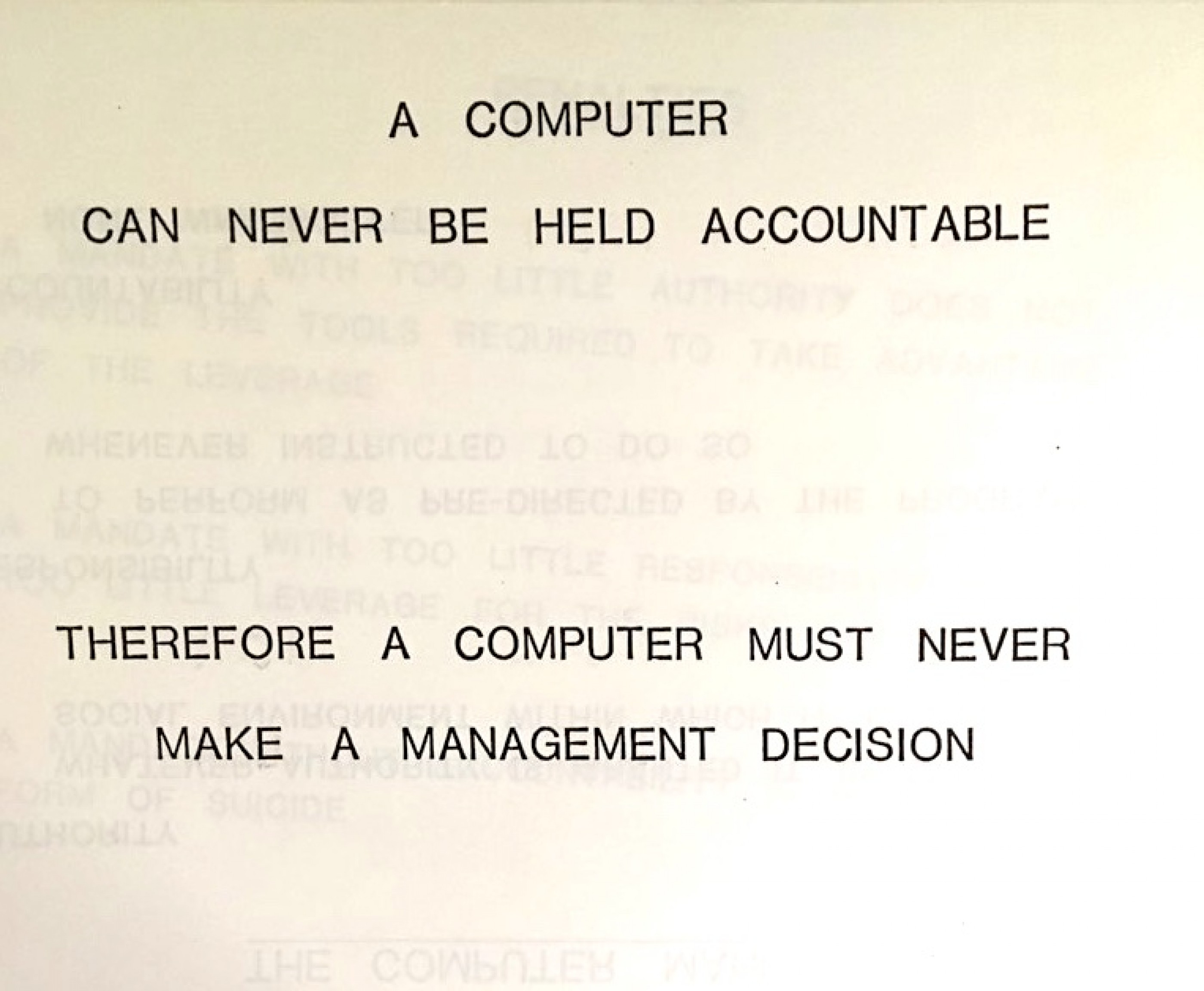

Fig. 2: Internal IBM document from a 1979 presentation. Source: https://twitter.com/bumblebike/status/832394003492564993?s=20

The Invisible Labor of Critique

I’d like to redirect your attention to another issue. The letter about pausing AI, and by extension the sentiment of people working in the AI industry, ignores the long tradition of critique and the many critical voices from the tech industry as well as from feminist academic scholarship and activist research. In a heroic gesture, the tone of this letter assumes a position of authority (they know what they’re talking about) and originality (they are the first to foresee the severity of this threat). If it weren’t so indicative of the power and danger of white male privilege, it would almost be amusing to show how, if the signatories had any clue about these critical traditions, and thus really knew what they were talking about, they would logically need to abolish their own discriminatory business models. The open letter does refer in a footnote to the influential 2021 paper “Stochastic Parrots,” in which AI researchers such as Emily Bender and the tech-worker-cum-whistleblower Timnit Gebru, amongst others, warn of the potential dangers of synthetically generated media. But the letter misappropriates this warning to shore up its apocalyptic AI scenarios and ultimately its longtermist ideology.

Consequently, Bender and Gebru, along with other former Google employees such as Margaret Mitchell and Meredith Whittaker, have spoken out against the current open letter, stating that the problem with AI is not superintelligence and that the risks and harms were never about “AI becoming too powerful” and losing control of human civilization in the future. Instead, they remind us that they have been arguing for several years that the problems with AI are about the present day “concentration of power in the hands of few people, about reproducing systems of oppression, and about damage to the natural ecosystem through profligate use of energy resources.” For their courage in speaking up about the discriminatory and surveillance practices in Google’s products years ago, they were not praised by the public media and 27,000 signatories, but instead Gebru was kicked out of her job at Google while no one listened to either of them. After the letter gained momentum, Geoffrey Hinton, the latest Google dropout—dubbed the “AI Godfather”—was praised by the major news media for his courage in leaving Google and criticizing its power. It should be added that when Hinton was still working at Google, he did not support the criticism of his female colleagues.

Fig. 3: Cell phone tower cleverly disguised to look like an evergreen tree. Located in New Hampshire.

So here we have another case of invisibilizing the work, intellect, and courage of women, especially Black women. Another case of the blind unconsciousness of industrial AI, whose actors seem to suffer from amnesia in a way that is strikingly similar to the widespread notion of information and data as a-historical, neutral, or even innocent. Of course, having this discussion about the visibility, authority, and credit of AI criticism from an angle that takes these patriarchal patterns into account does not mean that these women and their politics should be praised uncritically. Rather, it is a matter of revealing the relational pattern of who is recognized and who does the invisible and precarious work of laying the groundwork for critique to be expressed safely.

As the wheel continues to turn, US President Biden said in a recent meeting with the CEOs of industrial AI companies such as Microsoft and Google that AI is “extremely powerful and extremely dangerous” and that he hopes the CEOs can “enlighten us to tell us what is most necessary to protect society.”[3] To demand that the owners of problematic technologies solve a problem to which they have contributed is as undemocratic as it is absurd. This is perhaps not criti-hype, but it is consistent with the logic of a cycle that returns power to US capital to solve a problem for which it is largely responsible.

Perhaps less obviously, I think Biden’s call also points to a dynamic that has been so thoroughly explored by scholars of science and technology studies that it has become almost a truism in their discipline: that “AI” is morphing into an infrastructural quality and becoming normalized in the process, which in turn means that its backbone must become invisible.

While the entanglement of human–machine relations is probably experienced by everyone in everyday life, in both helpful and troublesome ways, technology is still presented in the mainstream imaginary as standing outside of social relations, as the big Other. It is interesting to see how technology can be redirected in many ways depending on the time and context: When we need it to be invisible, it is just a procedure, a mere tool, but when it is meant to mobilize attention and emotion, it is quickly anthropomorphized in the form of, say, feminized servant robots or apocalyptic Terminators, as Alexandra Anikina has recently described poignantly.

The normalization of “AI” thus renders invisible the question of what version of humanness we currently have and who it excludes. As described by many, the political imaginary of AI as a slave to human needs, as a machine that might one day rise up against its human creators, is deeply colonial and patriarchal, and is always based on the notion that Others are inferior, as executors that need to be controlled, oppressed, and exploited.[4]

It is useful to relate these mechanisms of in/visibility to what Neda Atanasoski and Kalindi Vora have called “surrogate humanity.” By this they mean technologies such as AI or robotics that serve as substitutes for human labor within a labor system anchored in racial capitalism and patriarchy. The idea is that certain groups, such as women, Black people, and people of color, are replaced by technology or marginalized from technological advancement. Surrogate humanity is therefore a form of dehumanization and displacement that marks the exclusion of human beings from the domain of the human.[5]

This exclusion is particularly evident in the ways that impoverished and marginalized communities are targeted and harmed by the policies and practices of technology companies, such as predictive policing, while at the same time these communities bring chatbots such as ChatGPT to life through their (gig) work.[6]

Ultimately, the debate about a future superintelligence obscures the mundane realities of valorizing the free labor of social media output within a regime of AI production:[7] many machine learning algorithms are trained on content scraped from the Internet. The so-called Deep Learning Revolution, including Large Language Models (LLMs) such as ChatGPT, is owed not so much to a scientific breakthrough in new AI techniques as to the development of more efficient computer architectures and chip designs. It was not until this new hardware made it possible to use concentrated data on a larger scale and run deep-learning algorithms on it that the desired results could be achieved, with both the hardware and software, and the huge profits, in the hands of a few technology companies.[8]

The strategy of scraping the labor and content that other humans have put online has proven very attractive for industrial AI. The tools they have developed are attractive to companies because they can save on paying and employing actual human writers or artists while extracting their work and that of gig workers in Kenya for the chatbot to appear “smart,” just as many other invisible gig workers have done for many other supposedly intelligent machines.[10] Clearly, the turning of political problems into problems of information technologies seeks to make this invisible. We need to ask ourselves who benefits from these systems and who is excluded. In this debate it is also essential to recognize the (care) work required to maintain these systems. Everyone wants to build new AI tech systems, but at what costs are we to maintain the infrastructures that are already in place?

Fig. 4: A crashed balloon from the Google Loon project, in a Mexican field, 2019.

Venture Hacker-philanthropy for a Better World, Faster

Returning to Biden’s call and the question of whether US tech companies have done a good job of protecting society in the present and in the past, I want to introduce two types of tech intervention that are interrelated: solutionism and longtermism. Since the current debate about AI and who gets to save the world is deeply moralistic, it makes sense to make a connection to other activities led by major tech companies in the name of world-bettering and tech philanthropy.

Considering that any system is only as good as the (Californian) ideologies surrounding its development, I would like to venture that these interventions essentially bring more harm to the world, in the past, in the present, and in possible futures.

The rise of tech companies has led to the return of “solutionism,” the idea that technology can solve all sorts of problems.[11] At the same time, tech conglomerates, which have become extremely powerful institutions of private wealth, began to engage in philanthropy while essentially abandoning the traditional notion of monetary donations. The Bill & Melinda Gates Foundation developed a new philanthropic strategy early on. This new form of philanthrocapitalism, or “venture hacker philanthropy” as some have called it, is not about investing in name-laundering, the “good old” naming of libraries, schools, or hospitals after generous donors.[12] Instead, its proponents are involved in climate protection, in the fight against child mortality, and in education against poverty. No money is donated; instead, venture capital is used to promote concrete implementation, with independent accompanying research.

In this worldview, technology is usually lauded as a universal tool to save the world, but the technology itself has no face; it is not humanized, unlike in the open letter, where machines are imagined to be on the verge of taking over the world. This tech philanthropy is a form of capitalism that aims to do good and create a better world through the use of technology. In this manner the lines between for-profit and not-for-profit entities, between foundations and corporate social responsibility departments, and between charities, social enterprises, and non-governmental organizations become increasingly blurred.

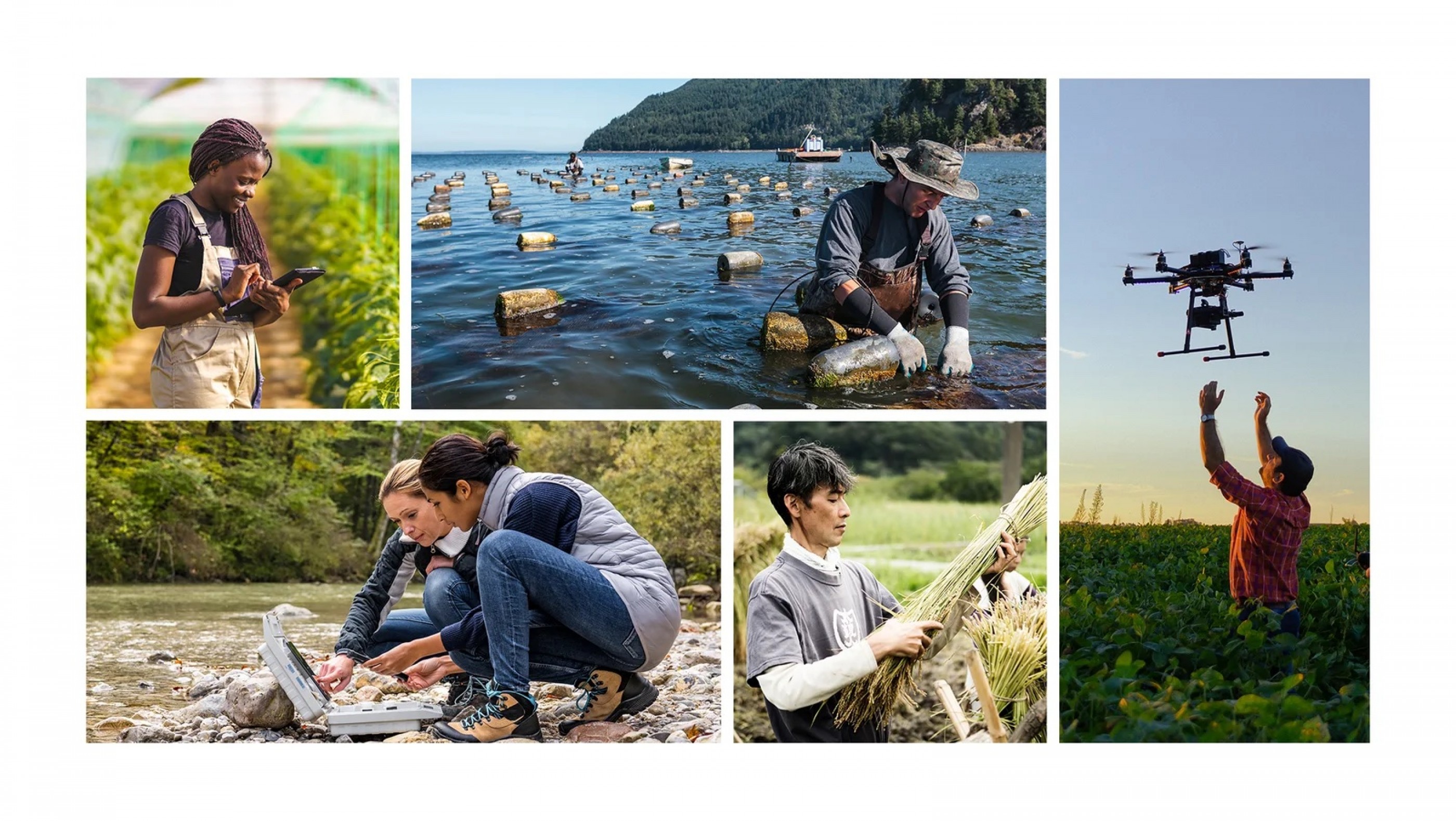

Fig. 5: Publicity images of Google's CSR work, from Google.org: https://www.blog.google/outreach-initiatives/google-org/ideas-to-drive-climate-action/

Universal Scales of World-bettering

Large technology companies such as Google, Meta (née Facebook), and Microsoft have increasingly launched philanthropic initiatives in the Global South, often portrayed as efforts to provide technological solutions to social and environmental problems. While the companies are active, i.e., solving problems, innovating, etc., the regions and people of the Global South are the passive recipients. This asymmetry is not problematized and is reminiscent of colonial logics of power. Shoshana Zuboff, for example, who has written on surveillance capitalism, refers to Africa as a mere recipient of surveillance technologies and often uncritically reproduces the claims of bold marketing-speak, which is why Lee Vinsel has used her work as an example of academic criti-hype.

Google.org, the philanthropic arm of Google/Alphabet Inc, is an example of this approach. Its website says it wants to “bring the best of Google to support underserved communities and create opportunities for everyone, by combining funding, innovation and technical expertise.”[13] But who is this universal “everyone”? My own research on coding boot camps in refugee camps in the Middle East has shown how many of the students’ dreams and hopes, such as moving to the US or Canada to work in tech headquarters, did not come to fruition; instead, they stayed where they were, doing remote online work that was paid by the project, piece, or hour, and offered no protections of workers’ rights. This raises questions about the impact of philanthropy, including how it affects the autonomy and agency of those targeted by such interventions, and how it reinforces existing power imbalances. Ultimately, it is labor that moves, not people.[14]

In academia, these activities have been criticized by feminists and scholars of science and technology studies, who argue that idealized visions of technology and progress are fundamentally linked to the exclusion and dehumanization of certain groups. Feminist scholars have long argued that technology is not neutral and that it reflects and reinforces power relations in society. For example, Donna Haraway has shown how technologies are situated and shaped by the specific social and historical contexts in which they are developed and used. In the context of philanthropy in the Global South, this enables us to perceive how technologies developed and deployed by large technology companies have been shaped by power dynamics between the Global North and South. As a result, technologies tend to reinforce existing inequalities rather than address them.

Initiatives such as Meta’s (formerly Facebook’s) Free Basics program, which provides limited Internet access in the Global South, have been criticized for reinforcing existing power relations by granting a few powerful companies control over access to information.[15] Google’s Project Loon, meanwhile, which used balloons to provide Internet access in remote areas, has also been criticized for not taking into account the underlying social and economic factors that contribute to the digital divide. In addition, these initiatives often do not consider the gender and racial dimensions of technology and power.

For example, women, Black people and people of color are often excluded from decision-making processes related to the development and deployment of new technologies, resulting in technologies that reflect the perspectives and interests of a small group of developers. This has many implications. Technologies designed to increase agricultural productivity can reinforce the gendered division of labor by favoring male-dominated forms of agriculture. Similarly, technologies developed to address health problems can ignore women’s specific health needs and concerns because the data on which they are based often come from middle-aged white men. This harms marginalized communities by reinforcing gendered and racialized divisions.

The forms of solutionist tech-utopianism hitherto described, which all aim to make the “world a better place, fast” through their intervention, are directed toward the present.[16] The ideological contradiction between this presentism and the visions propagated by longtermist organizations such as the aforementioned Future of Life Institute, which are concerned with saving humanity’s distant future, should be obvious. The Future of Life Institute, the organization responsible for disseminating the open letter to pause AI development, is a transhumanist and longtermist organization that promotes the idea that the long-term impact of emerging technologies, especially AI, needs to be considered because they could have catastrophic consequences for the future of humanity. While this does not sound unreasonable at first glance, the problem is that its proponents are more concerned about a distant hypothetical techno-utopian future than they are about the real-world problems that the use of technology is causing today, such as the exploitation of workers, the reproduction of systems of oppression, the concentration of power, and the detrimental effects of technological energy consumption.

Longtermism is a powerful ideology. Whether people use it as a tool (without believing in it themselves) or really believe in it, they are either way interpellated into a larger bundle of ideologies, as Émile P. Torres, philosopher and former longtermist, has pointed out. The ideas of longtermism are closely related to those of transhumanism, the idea of improving human longevity and cognition. Transhumanism is inherently discriminatory because it defines what an improved human is, creating a hierarchical concept that mimics first-wave eugenics, as Timnit Gebru has stated.[17]

This eternal return of eugenics in computing, as Torres has called it, is gaining momentum in the jargon of “human flourishing,” of “maximising potential by breeding those who are desirable.”[18] The open critique of this eugenic genealogy has sparked controversy in the AI community concerned with fair, accountable, and ethical AI, such as the Conference on Fairness, Accountability and Transparency (FAccT).[19] In addition, as always, there is a longer, well-informed history of criticism and resistance, especially from Black women who have left tech or academia for speaking out and making power dynamics visible. One figure worth mentioning in this conjuncture is J. Khadijah Abdurahman, who is—among other things—the editor of Logic(s), the first Black and Asian queer tech magazine, and who has extensively critiqued the computer science community with knowledge and wit, coupling her critique of the persistence of racial discrimination in the tech industry with an analysis of political economy.[20]

Tech companies in all sectors have a common desire, rooted in the material needs of their business models as infrastructures for labor and resource extraction, a key foundation for their profits: the world as programmable, or the world highly digitized so that operations can be performed: “The Amazon dream of autonomous drones that can deliver parcels or the Uber dream of autonomous cars that can transport passengers are only possible in a world in which multiple overlapping spaces, activities, and processes are highly digitally legible.”[21]

Fig. 6: Amazon warehouse workers outside the National Labor Relations Board, New York City, New York, U.S. October 25, 2021

Claim-making in present and future tense

In her analysis, Louise Amoore argues that predictive policies driven by algorithms exclude possible futures by attempting to secure against uncertain outcomes or, in short, by determining that uncertain futures must be controlled by turning them into calculated possibilities. This, she argues, undermines the very essence of political life, which is to make difficult choices in the face of uncertainty and to determine how we live together as a society. Algorithms, themselves shaped by normative ideas, in turn shape notions of what is good, normal, ideal, transgressive, and risky, and increasingly become a proxy for real political struggles and debates.[22] This equally excludes other potential ways of relating, in the present and in the future.

In presenting the aforementioned interventions by US technology corporations, I wanted to discuss how both, by making claims about the present and the future, aim to secure authority over what is at stake, who deserves to save humanity, now and then, and what forms of humanness are included and consequently excluded. While human lives are hurt and profits are made every day, supposed worlds and an abstracted, endangered “humanity” are saved by tech philanthropy. In some ways, this could be seen as an update of the “Californian ideology,” a paradoxical mix of contradictory beliefs of the political left and right as guiding principles of Silicon Valley, with technological determinism and tech utopianism as common ground.[23]

Perhaps the most apt embodiment of this contradictory bundle is Jeff Bezos. While Amazon workers in New York were organizing to unionize in 2021, Bezos was busy with his space exploration projects.[24] While the world’s richest man sought to colonize other planets and leave behind this one, his employees were organizing and advocating for better working conditions on the planet he has been instrumental in degrading. As absurd as this sounds, this is today’s reality.

“They are largely right,” Bezos said of critics who say billionaires should focus their energy—and money—on issues closer to home. “We have to do both. We have lots of problems here and now on Earth and we need to work on those, and we always need to look to the future. We’ve always done that as a species, as a civilization.”[25]

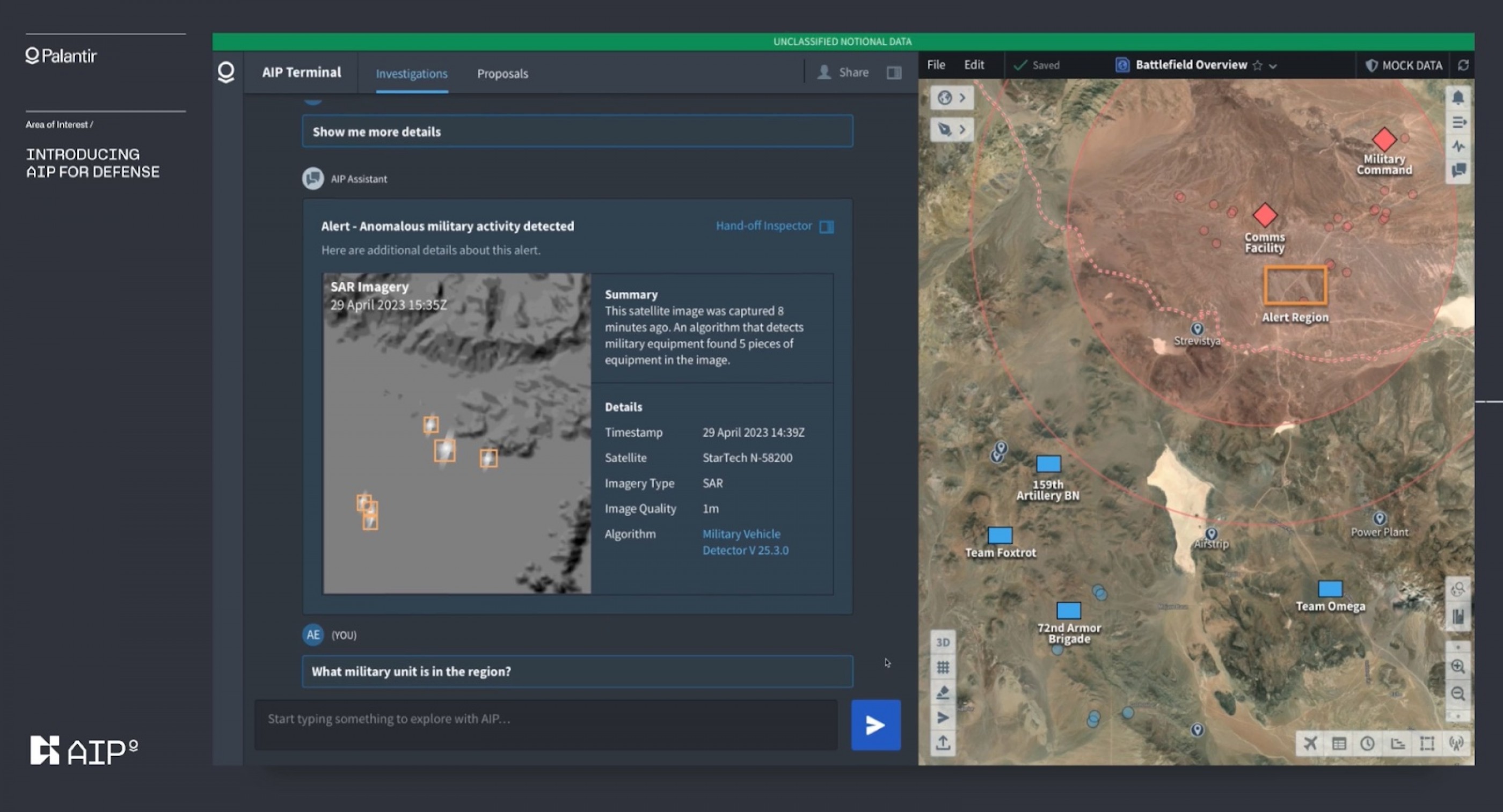

Fig. 7: Screenshot of Palantir’s promotional video with an interface that looks strikingly similar to ChatGPT’s

Dissident counter cultures of organizing

Billionaires who cultivate a venture-esque philanthropy mentality are increasingly portrayed and understood as those who bear the responsibility for saving the planet, as representatives of human civilization and our species as a whole. This is worth dwelling upon: the gradual shifting of significant political power, infrastructure, scientific research, and discursive authority to tech capital. It shouldn’t be up to such figures to imagine how to live in the present and the future.

By naming these changes and resisting these processes through organizing, social movements, and counterculture in the tech industry and academia, a thorough and informed tech critique, as Meredith Whittaker described for the US context in 2021, “has already powerfully influenced public discourse and the global regulatory agenda in ways that tech firms are actively resisting.”[26] She states that this critique has also moved beyond simplistic ideas of bias and into examining how technology reproduces racial marginalization and concentrates power in the hands of those who can afford to develop and use AI infrastructures.

Also crucial here is a scholarship and movement practice that, as Whittaker writes, is “attentive to racial capitalism and structural racism” and “has provided many of the methods and frameworks core to critical work engaging the social implications of tech.”[27] The debates about “dangerous AI” that have developed in the last few years in the European context mimic the US discourse and are too often just that: a superficial preoccupation with Big Tech, like the discourse on “ethical AI” that fails to address the root causes of discriminatory technology. Moreover, technology companies operate across countries. For this reason, I want to share a recent case in Germany that came to my attention thanks to an open letter initiated by my colleagues Charlotte Eiffler and Francis Hunger, as well as Su Yu Hsin, Gabriel S. Moses, and Alexa Steinbrück.

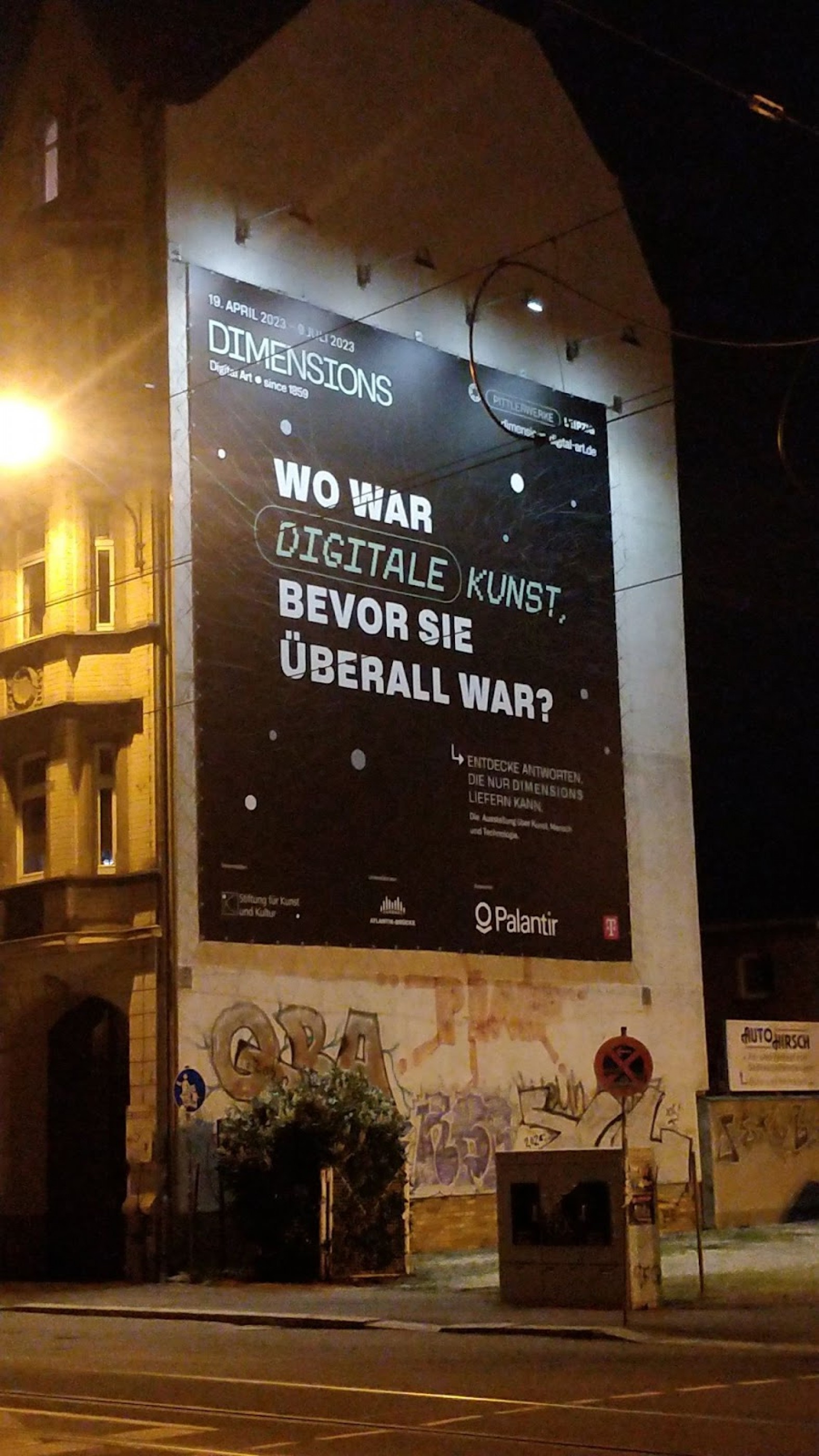

Fig. 8: Alexa Steinbrück, Twitter. The photo was accompanied by this text: “Not only #artwashing, but also #ethicswashing: In addition to the @PalantirTech-sponsored art exhibition in #leipzig, a symposium is held to discuss the risks of #AI. They express concern about the loss of ‘ethics and security’ in the context of the ‘AI arms race.’”

Years ago, the controversial, CIA-backed US tech company Palantir began to equip German police departments with its Gotham software. Now, in 2023, it is sponsoring an exhibition in Leipzig called “Dimensions—Digital Art since 1859,” which “appears to be an attempt to distract the public from any political dimension of the digital by negotiating the history of the digital primarily as an aesthetic phenomenon,” as the open letter states. Its authors criticize that, in this way, any questions about democratic control of surveillance technologies and the appropriation of data by corporations like Palantir may hopefully be evaded.[28]

In addition to the exhibition, the program also includes a symposium. In another iteration of criti-hype, curator Walter Smerling employs an alarmist tone to warn against misinformation and propaganda by LLMs like ChatGPT. Clearly, the organizers have jumped on the hype about “ethical/safe AI.” Just two weeks ago, Palantir released its new “AIP” and “AIP for Defense” software, which integrates AI into decision making for military operations and is, ironically, based on LLMs, the same kind of generative AI as the supposedly so dangerous ChatGPT.

In the first few seconds of the same promotional video we read that their claims are actually just predictions and thus involve uncertainties and risks:

Contact your Palantir representative to learn more. This video contains “forward-looking” statements within the meaning of the federal securities laws, and these statements involve substantial risks and uncertainties. (…) Forward-looking statements are inherently subject to risks and uncertainties, some of which cannot be predicted or quantified. In some cases, you can identify forward-looking statements by terminology such as “guidance,” “expect,” “anticipate,” “should,” “believe,” “hope,” “target,” “project,” “plan,” “goals,” “estimate,” “potential,” “predict,” “may,” “will,” “might,” “could,” “intend,” “shall,” and variations of these terms or the negative of these terms and similar expressions. You should not put undue reliance on any forward-looking statements. (Emphasis mine)

Palantir announced that it expects to turn a profit in every quarter through 2023 due to overwhelming interest in its new artificial intelligence platform. As a result, Palantir shares rose more than 20% in extended trading. Truth be told, Palantir says it itself: Don’t believe the hype, because the “forward-looking” future is risky and uncertain. Still, criti-hype has sent its stock value soaring. In Palantir’s claims-making, AI is dangerous for society when used for products like ChatGPT, while AI for its own products is nothing more than a sophisticated stochastic tool, a mere process.

[1] www.futureoflife.org/open-letter/pause-giant-ai-experiments/

[2] It is important to note that this observation is not restricted to journalism and media, but also, as he argues, has become more widespread in academia, something that is very relevant for anyone engaged with critical AI research.

www.sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

[3] www.twitter.com/POTUS/status/1654237472065302528

[4] See for instance Ayhan Ayteş, “Return of The Crowds: Mechanical Turk and Neoliberal States of Exception,” in: Digital Labor: The Internet as Playground and Factory, ed. Trebor Scholz (Routledge, 2012).

[5] Neda Atanasoski and Kalindi Vora, Surrogate Humanity: Race, Robots, and the Politics of Technological Futures (Duke University Press, 2019).

[6] www.time.com/6247678/openai-chatgpt-kenya-workers/

[7] Tiziana Terranova, “Free Labor: Producing Culture for the Digital Economy,” Social Text 63, Vol. 18, No. 2 (Summer 2000): pp. 33–58.

[8] On the term Deep Learning Revolution see Terrence J. Sejnowski, The Deep Learning Revolution (MIT Press, 2018).

[9] www.twitter.com/KarlreMarks/status/1658028017921261569

[10] www.artisticinquiry.org/AI-Open-Letter

[11] “The term ‘solutionism,’ usually in the form of ‘technocratic solutionism,’ has been in use for many decades and means the belief that every problem can be fixed with technology. This is wrong, and so ‘solutionism’ has been a term of derision,” as scholar Jason Crawford writes.

www.technologyreview.com/2021/07/13/1028295/proud-solutionist-history-technology-industry/.

[12] A new book by Linsey McGoey reveals the influence over public policy that a massive philanthropy can wield. Michael Massing, “How the Gates Foundation reflects the good and the bad of ‘hacker philanthropy,’” The Intercept, www.theintercept.com/2015/11/25/how-the-gates-foundation-reflects-the-good-and-the-bad-of-hacker-philanthropy/.

[13] See www.google.org/.

[14] A. Aneesh, Virtual Migration. The Programming of Globalization (Duke University Press, 2006).

[15] Toussaint Nothias, Global Media Technologies and Cultures Lab MIT, www.globalmedia.mit.edu/2020/04/21/the-rise-and-fall-and-rise-again-of-facebooks-free-basics-civil-and-the-challenge-of-resistance-to-corporate-connectivity-projects/#:~:text=Their%20main%20criticism%20was%20that,services%20and%20local%20start%2Dups.

[16] Google for Developers, Google I/O 2015—Tech for a better world, faster: A discussion with Google.org’s social innovators, www.youtube.com/watch?v=DcezrMhwaSs

[17] Timnit Gebru, ‘Utopia for Whom?’: Timnit Gebru on the dangers of Artificial General Intelligence, The Stanford Daily, www.stanforddaily.com/2023/02/15/utopia-for-whom-timnit-gebru-on-the-dangers-of-artificial-general-intelligence/.

[18] For further reading on the debate about longtermist philosophies such as those propagated by Nick Bostrom, I want to refer to the article in this current UMBAU issue by Yannick Fritz. Further, this Twitter thread by Torres gives a good overview of the bundle of overlapping ideologies that Torres and Timnit Gebru have started to call “TESCREALism,” an acronym that stands for Transhumanism, Extropianism, Singularitarianism, Cosmism, Rationalism, Effective Altruism, and Longtermism, www.threadreaderapp.com/thread/1635313838508883968.html

[19] See www.facctconference.org/. In particular, Timnit Gebru reported how a paper that mentioned the persistence of eugenic ideologies in computer science culture got rejected by the reviewers for this year’s FAccT conference, www.twitter.com/timnitGebru/status/1644838301407522817

[20] See for instance J. Khadijah Abdurahman, FAT* be Wilin’. A Response to Racial Categories of Machine Learning by Sebastian Benthall and Bruce Haynes, Medium, www.upfromthecracks.medium.com/fat-be-wilin-deb56bf92539, J. Khadijah Abdurahman and Xiaowei R. Wang, Logic(s): The Next Chapter, www.logicmag.io/logics-the-next-chapter/

[21] Jamie Woodcock and Mark Graham, The Gig Economy: A Critical Introduction (Polity, 2019), p. 25.

[22] Louise Amoore, The Politics of Possibility: Risk and Security Beyond Probability (Duke University Press, 2013).

[23] This was described in the iconic text of the first wave of Net criticism by Richard Barbrook and Andy Cameron in the 1990s. Richard Barbrook, Andy Cameron, “The Californian Ideology” (condensed version), Mute, 1(3), (1995), www.metamute.org/editorial/magazine/mute-vol-1-no.-3-code.

[24] www.amazonlaborunion.org/.

[25] www.edition.cnn.com/2021/07/20/tech/jeff-bezos-blue-origin-launch-scn/index.html.

[26] Meredith Whittaker, The steep cost of capture, in: INTERACTIONS.ACM.ORG, www.papers.ssrn.com/sol3/papers.cfm?abstract_id=4135581.

[27] This text is largely based on US contexts and yes, that sucks because in a way, it reproduces the centering of Silicon Valley narratives claiming that high-tech is produced in the West and applied to the “Rest.” However, I think it is important for European and German contexts to be aware of the debates emerging from there.

Published on 2023-06-19 13:30